Data-intensive applications, such as AI training and inference, climate and Earth modeling, and other complex ensembles of simulations in High Performance Computing (HPC), require data management systems (DMS) that can handle huge volumes of data, in the petascale to exascale range, while minimising transfer latency and optimising throughput.

From the perspective of HPC end-users, HPC data centers impose technical limitations on data management, related to security concerns, that restrict access to data to slow, sequential SSH-like connections (e.g., SCP, SFTP). Although the HPC community has developed more efficient parallel data transfer solutions such as GridFTP, these are not widely adopted in most EuroHPC centers, are complex to set up for both HPC administrators and end-users, and are not compatible with popular data lakes and other Cloud data storage systems.

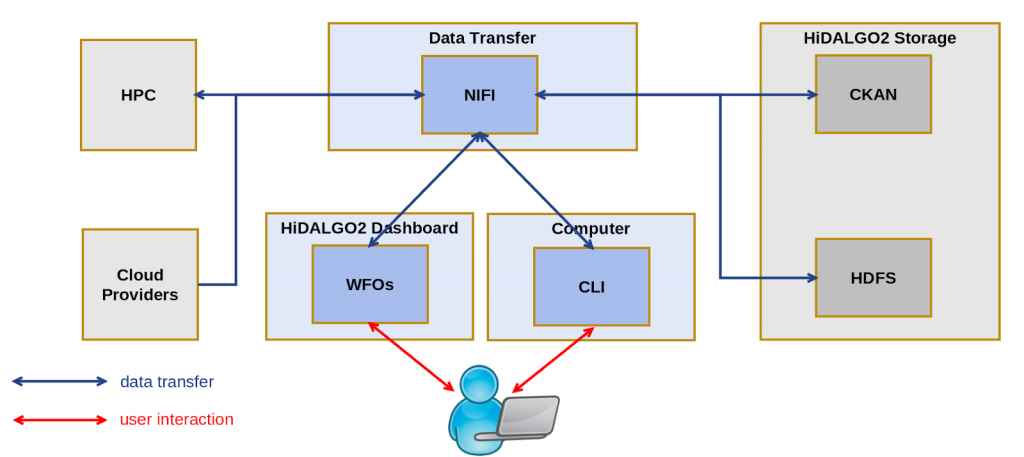

In HiDALGO2, we have designed a Cloud DMS, external to and compatible with any HPC cluster and intended for end-users, that addresses some of the above challenges. This DMS builds on the state of the art in big data and data lakes for huge volumes of data (such as Hadoop HDFS), data sharing systems for publication (such as CKAN), distributed data-intensive computing for data exploitation (such as Hadoop YARN), and ETL/ELT data pipeline engines for efficient data transfer (such as Apache NiFi) (see Figure 1).

The HiDALGO2 DMS is a Cloud platform consisting of:

- A data storage system, based on Hadoop HDFS and CKAN, capable of scaling up to the exascale on demand

- A data transfer system, based on NiFi pipelines, that achieves efficient transfer rates through parallel multiplexers and is compatible with SSH-like transfer (SFTP/SCP) between Cloud data providers, HiDALGO data storage (HDFS, CKAN), and the end-users’ utility computing

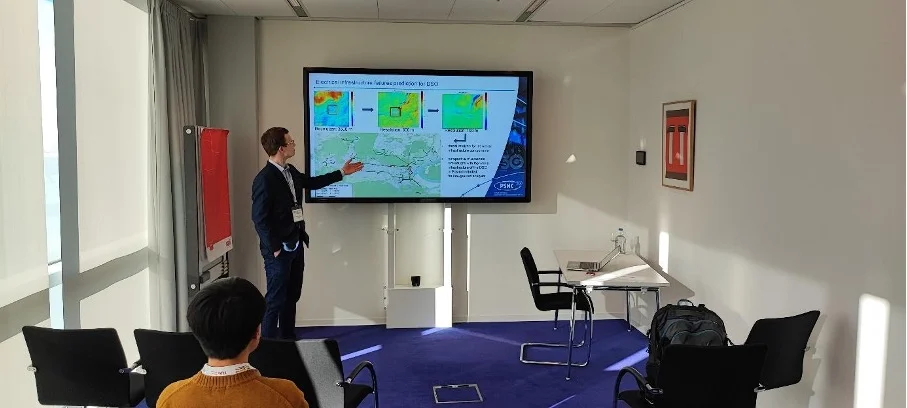

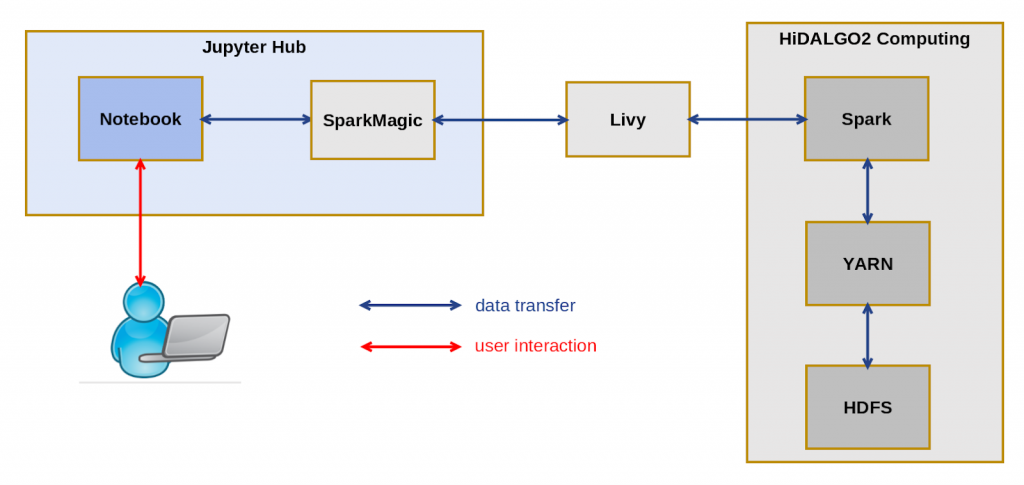

- A data-intensive computing system, based on Hadoop YARN and Spark, for High Performance Data Analytics (HPDA) on HPC simulation data (see Figure 2), which processes the data close to where it is stored

- A Python library (for tool integration, as adopted by the HiDALGO Workflow Orchestrators) for data transfer from/to Cloud, CKAN/HDFS, and HPC data providers/consumers

- A Python CLI tool for end-users offering the data transfer features supported by the aforementioned library
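To illustrate why parallel transfer outperforms a single sequential stream, the sketch below splits a file into byte ranges and copies them concurrently. It is a minimal, local-filesystem illustration of the general idea only: it does not reproduce the NiFi pipelines or the HiDALGO2 library, and the names `chunk_ranges`, `copy_chunk`, and `parallel_copy`, as well as the chunk size and worker count, are assumptions made for this example.

```python
import os
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 4 * 1024 * 1024  # 4 MiB per chunk (arbitrary illustrative value)


def chunk_ranges(total_size, chunk_size=CHUNK_SIZE):
    """Split [0, total_size) into (offset, length) byte ranges."""
    return [
        (offset, min(chunk_size, total_size - offset))
        for offset in range(0, total_size, chunk_size)
    ]


def copy_chunk(src_path, dst_path, offset, length):
    """Copy one byte range; each worker opens its own file handles."""
    with open(src_path, "rb") as src, open(dst_path, "r+b") as dst:
        src.seek(offset)
        dst.seek(offset)
        dst.write(src.read(length))
    return length


def parallel_copy(src_path, dst_path, workers=4, chunk_size=CHUNK_SIZE):
    """Copy src to dst with several concurrent chunk transfers.

    Hypothetical helper: a stand-in for a multiplexed transfer,
    not the HiDALGO2 implementation.
    """
    total = os.path.getsize(src_path)
    # Pre-allocate the destination so workers can write at any offset.
    with open(dst_path, "wb") as dst:
        dst.truncate(total)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(copy_chunk, src_path, dst_path, off, length)
            for off, length in chunk_ranges(total, chunk_size)
        ]
        # Return the number of bytes copied across all chunks.
        return sum(f.result() for f in futures)
```

In a real deployment, each chunk would travel over its own network channel, so the achievable gain depends on the link, the storage back end, and the number of channels the remote endpoint accepts.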

With the HiDALGO2 DMS, HPC end-users can manage the following:

- Provision of data required for their simulations in any EuroHPC center, taken from relevant Cloud providers, such as the European Data Center, or from datasets located at their computing facilities or the HiDALGO2 storage (HDFS or CKAN)

- Execution of HPDA on the input or output data of their HPC simulations, by exploiting the HiDALGO2 HPDA computing platform based on Spark/YARN and the HiDALGO2 JupyterHub notebooks

- Efficient transfer and storage of huge volumes of HPC simulation data on the HiDALGO2 storage (HDFS/CKAN), for further data analytics and visualization, by using the HiDALGO2 Visualization tools and Dashboard

HPC end-users access the HiDALGO2 storage through either CKAN (via its Web portal) or HDFS (via a Web portal based on Cloudera HUE or the HDFS CLI). Data can be transferred from data providers:

- Cloud providers such as NOAA or the ECMWF European data center

- CKAN and HDFS (user datasets)

- HPC centers (user simulation data)

- End-users’ utility storage (e.g., laptops/workstations)

to data consumers:

- CKAN and HDFS (user datasets)

- HPC centers (user simulation data)
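The provider and consumer lists above amount to a small routing table. The sketch below encodes it so that a requested transfer can be checked before it is attempted. The `Endpoint` enum and the `is_supported_route` function are hypothetical names introduced for this illustration and are not part of the HiDALGO2 library or CLI.

```python
from enum import Enum


class Endpoint(Enum):
    """Endpoint kinds named in the lists above (labels are illustrative)."""
    CLOUD = "cloud"   # e.g., NOAA or ECMWF
    CKAN = "ckan"     # user datasets
    HDFS = "hdfs"     # user datasets
    HPC = "hpc"       # user simulation data
    LOCAL = "local"   # end-user laptop or workstation


# Every endpoint kind can act as a data provider ...
PROVIDERS = {
    Endpoint.CLOUD,
    Endpoint.CKAN,
    Endpoint.HDFS,
    Endpoint.HPC,
    Endpoint.LOCAL,
}
# ... but only CKAN, HDFS, and HPC centers are listed as consumers.
CONSUMERS = {Endpoint.CKAN, Endpoint.HDFS, Endpoint.HPC}


def is_supported_route(source, target):
    """Return True if source is a listed provider and target a listed consumer."""
    return source in PROVIDERS and target in CONSUMERS


if __name__ == "__main__":
    print(is_supported_route(Endpoint.CLOUD, Endpoint.HDFS))
    print(is_supported_route(Endpoint.HDFS, Endpoint.LOCAL))
```

Whether additional constraints apply to particular pairs (for example, transfers between two endpoints of the same kind) is not stated here, so the sketch checks list membership only.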

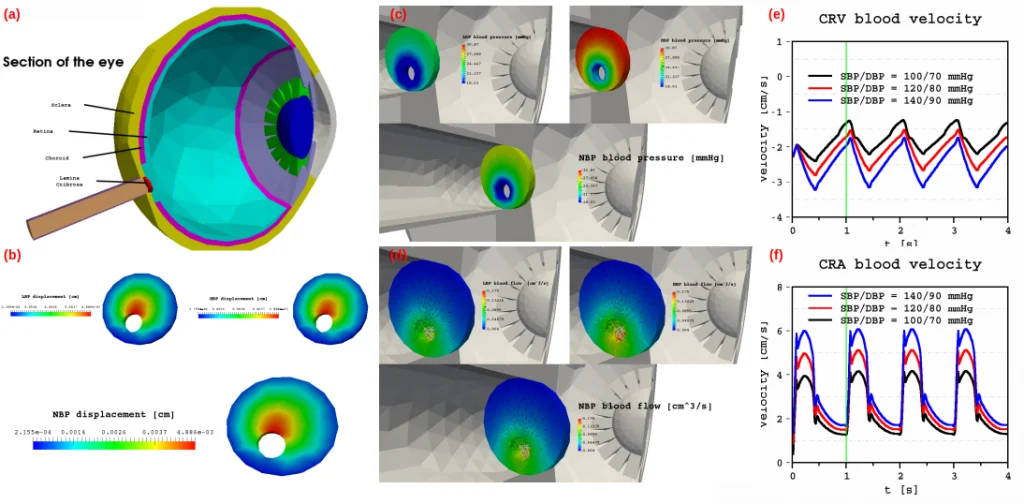

As an example, consider a common data management and transfer scenario. A scientist is simulating, in an HPC center, the impact of air pollution on the energy efficiency of urban buildings. She requires huge datasets from ECMWF as input for her simulations, which will be executed in several EuroHPC data centers. As stochastic uncertainty is a key factor, she plans to run an ensemble of simulations to estimate it. Because transferring data from ECMWF is slow, she sets up a CI/CD pipeline that automates the offline transfer of data from ECMWF to HDFS using the HiDALGO2 data transfer CLI.

When ready, the required datasets are transferred from HDFS to the target HPC center using the data transfer CLI. If needed, HPDA is run on some of the input datasets in the HiDALGO2 HPDA platform. As soon as the simulations are completed at the target HPC centers, results are transferred back to HDFS for further processing and visualization, because the quota at the target HPC center is quickly exhausted. Once final results are obtained, they are transferred to CKAN for sharing and publication. The entire data transfer pipeline can be scheduled within the CI/CD procedure or run manually from the user’s laptop by issuing simple commands in the console.
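The scenario can be summarised as an ordered list of transfer steps. The sketch below only builds and prints such a plan; it does not invoke the data transfer CLI or library, whose actual commands and options are not shown here. `TransferStep`, `build_scenario_plan`, and the center names `hpc-a` and `hpc-b` are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransferStep:
    """One transfer in the scenario (hypothetical structure)."""
    source: str
    target: str
    purpose: str


def build_scenario_plan(hpc_centers):
    """Return the ordered transfers described in the scenario above."""
    # 1. Offline staging of input data, automated by the CI/CD pipeline.
    steps = [TransferStep("ECMWF", "HDFS", "stage input datasets offline")]
    # 2. Inputs go from HDFS to each target HPC center.
    for center in hpc_centers:
        steps.append(TransferStep("HDFS", center, "provide simulation inputs"))
    # 3. Results come back to HDFS for processing and visualization.
    for center in hpc_centers:
        steps.append(TransferStep(center, "HDFS", "collect simulation results"))
    # 4. Final results are published through CKAN.
    steps.append(TransferStep("HDFS", "CKAN", "publish final results"))
    return steps


if __name__ == "__main__":
    plan = build_scenario_plan(["hpc-a", "hpc-b"])
    for number, step in enumerate(plan, start=1):
        print(f"{number}. {step.source} -> {step.target}: {step.purpose}")
```

A plan of this kind could be turned into CI/CD jobs or into a sequence of console commands, with each step mapped to one invocation of the transfer tool.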

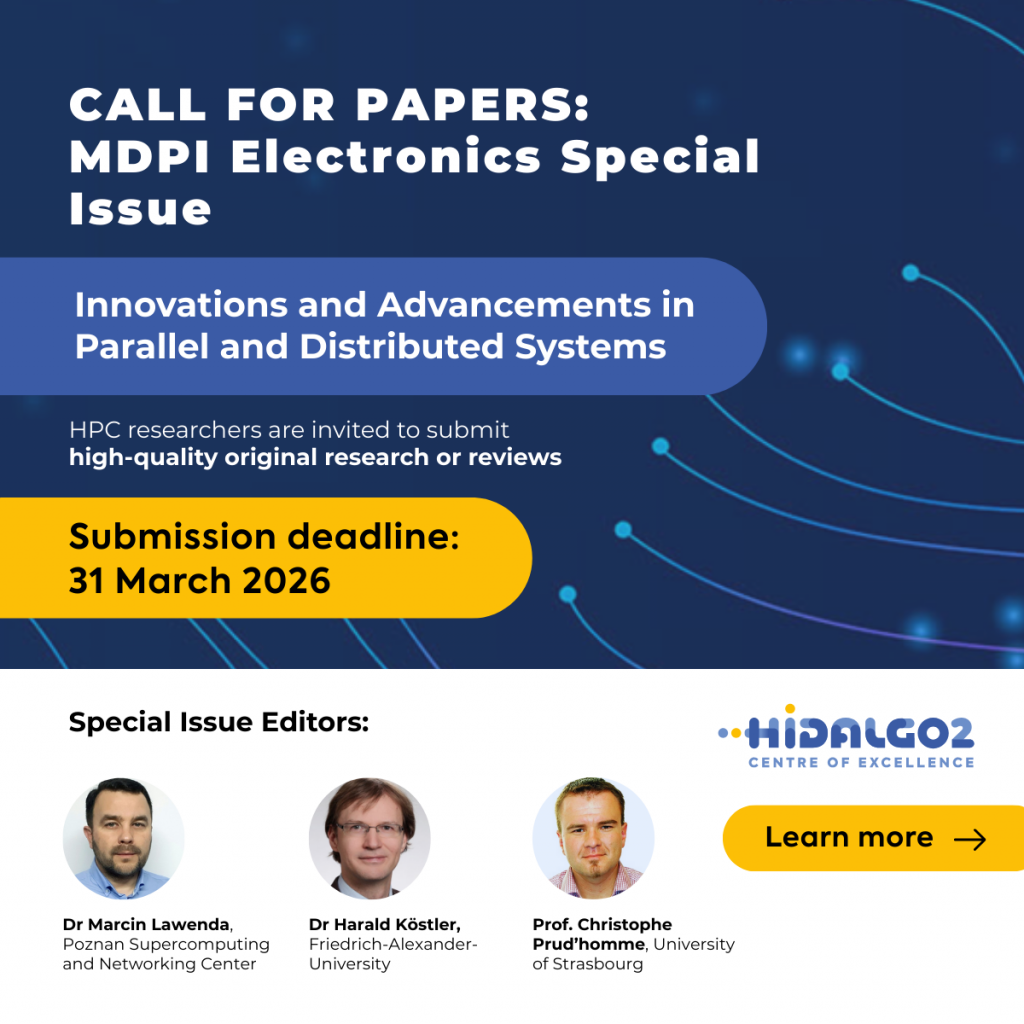

The HiDALGO2 DMS is currently available for the HiDALGO2 pilots at PSNC Cloud. For further information, visit https://www.hidalgo2.eu/

The HiDALGO2 Data Transfer Library is available at: https://pypi.org/project/hid_data_transfer_lib/

The HiDALGO2 Data Transfer CLI tool is available at: https://pypi.org/project/data-transfer-cli/